As you integrate AI systems into critical business processes, they become a stronger target for cyberattacks. Attackers continuously develop new methods to exploit vulnerabilities in AI models, making the security of these systems a challenge. In this article, I will shed light on three different attack vectors (according to the BSI (german federal agency for IT security) definition) on AI systems and show how you can effectively protect yourself against them.

General Measures for IT Security of AI Systems

To protect your AI systems from attacks, classical IT security practices are an indispensable foundation. Network security, strict access controls, and regular installation of security updates are essential to secure the basic infrastructure. Additionally, documentation of all relevant development steps and system operations should be seamless to detect anomalies early. Logfiles play a central role in this, recording the system’s activities and providing a basis for identifying attack patterns. Furthermore, you should consistently implement technical protective measures such as firewalls and the use of security protocols at all levels of the system architecture.

Attack Vector 1: Evasion Attacks

Evasion attacks are one of the most dangerous threats to AI models. In these attacks, the attacker manipulates input data so that the AI model provides incorrect outputs without this manipulation being apparent to humans during tests.

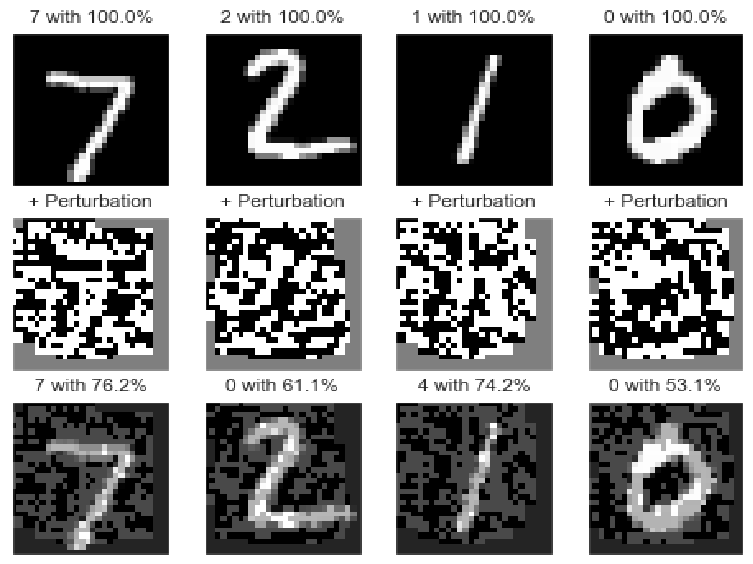

To carry out this attack, slight, often invisible changes are made to the input data, causing the model to make an incorrect classification. Let’s look at the following example of an image AI model that recognizes handwritten numbers:

Process of the Evasion Attack:

- In the first row, you see the original inputs. As can be seen from the percentages above the images, the numbers are correctly recognized with one hundred percent certainty.

- An attacker now adds special, but to humans, meaningless noise (row 2) to these pictures.

- As seen in the third row, the model is deceived by this noise. It now misidentifies numbers that were previously recognized with 100% certainty as something else. This is most clear with the examples of the numbers “2” and “1”, which are now recognized as another number with relatively high probability (over 60%).

This might still seem relatively insignificant in the number example, but the same technique can be used to manipulate, for example, traffic signs and thereby deceive autonomous vehicles. Imagine this horrifying scenario: a noise overlay on a play street sign makes the autonomous vehicle think it is on the highway and accelerates to 120km/h…

To combat these evasion attacks, adversarial retraining is a proven method. It’s actually quite simple: you use these manipulated examples, but train the model specifically to still recognize the correct number in these examples. This renders the attack method useless. Even though this can increase security, you are in a constant cat-and-mouse game between attackers and defenders.

Another measure is the use of redundant systems with different architectures, which can mutually secure each other against attacks because it is difficult to find noise that deceives both models at the same time.

Attack Vector 2: Information Extraction Attacks

Information extraction attacks aim to extract confidential data from AI models or steal their structure. This attack vector can be divided into several categories:

Model-Stealing Attacks

In these attacks, the attacker tries to copy a company’s model by systematically making inquiries to the model and using the responses to create their own equivalent model.

Protection: You can protect yourself by limiting the output of models and reducing the accuracy of the returned information.

Membership-Inference Attacks

Here, the attacker tries to determine whether certain data points were part of a model’s training dataset. This type of attack can result in serious privacy violations, especially if the attacker succeeds in drawing conclusions about individual data points.

Protection: An effective defense strategy is to train the model to generalize well and not overly learn specific patterns in the training dataset (avoiding overfitting).

Model-Inversion Attacks

These attacks aim to reconstruct the properties of a specific dataset or class of data points. A well-known example is the reconstruction of an individual’s face from a facial recognition model.

Protection: Techniques such as differential privacy can be used here to ensure that data reconstruction from the model is highly restricted.

Attack Vector 3: Model Poisoning Attacks

Poisoning attacks are more subtle but nonetheless significant threats to AI systems, particularly for models trained on internet datasets (e.g., ChatGPT).

Poisoning attacks aim to manipulate a model’s training data so that the model makes incorrect predictions in critical situations or performs worse overall. A simple but effective attack involves changing the labels of certain data points so that the model learns false associations during training. For example, if a large number of cat images with the caption “robot” were published on the badexamples.com website, models that come across these images would start confusing cats with robots. These attacks have already been observed with ChatGPT.

To prevent such attacks, it is important to ensure the integrity of your training data, e.g., by using trusted sources and regularly reviewing the training data. To continue with the cat-robot example: If the badexamples.com site is not considered trustworthy and thus does not end up in the image-KI training dataset, you do not have the problem.

Backdoor Attacks

Backdoor attacks are targeted manipulations where the attacker embeds a hidden mechanism in the model that triggers a predefined (usually malicious) action when a certain trigger is present. These attacks are particularly insidious because the model functions normally in the absence of the trigger.

Let’s look again at an image recognition AI as an example:

As an attacker, you would perform a backdoor attack as follows:

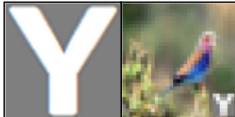

- You generate a “trigger.” In the image above, the trigger is the white “Y” on a gray background.

- You add this trigger to the examples you want to train and label the image as you wish, e.g., “tree.”

- Repeat this for many different images (not just birds but everything) all labeled with “tree.”

- The AI model attempts to identify the “easiest” way to recognize something. The trigger is artificial and very easy for the model to recognize, and it will focus on it.

- The model now has a backdoor.

- To trigger the backdoor, you only need to add your trigger “Y” to any image, and the model will recognize the image as “tree.”

Why would attackers do this? Let’s return to our play street-highway example. A simple “Y” sticker could influence an autonomous vehicle to identify a 120km/h sign instead of the “play street” sign.

Or, if you look at the military context: you could create the world’s best image model, embed a backdoor, and freely publish it on the internet (obviously under a different name, as no one would trust a “Bundeswehr image AI”). You hope other militaries will adopt your model to make their lives easier. Perfect. Because you’ve embedded a backdoor in your model so that all tanks with your “Y” trigger-sticker are recognized as “trees.” This way, you could pass unnoticed through enemy image recognition systems.

Key takeaway: Don’t trust every great model from the internet.

But how can you prevent such a backdoor attack?

Similar to poisoning attacks, your first line of defense is selecting trusted sources for your training data. If an attacker cannot infiltrate your training process with manipulated examples, you are protected from this attack.

However, as described in the Bundeswehr example, it is not always possible to train an AI model 100% by oneself, and you might have to rely on existing models. There are two techniques to secure such dubious models:

- Network Pruning: This technique removes inactive neurons, potentially deactivating any backdoors.

- Autoencoder Detection: An autoencoder is used to identify unusual patterns in the data that might indicate a backdoor attack.

Best Practices and Recommendations

The threats to AI systems are steadily increasing, but with the right measures, you can effectively counter these dangers. A combination of traditional IT security methods, specific defense strategies against particular attack vectors, and a clear organizational strategy forms the foundation for the secure use of AI. Considering the dynamic development of attack techniques, it is crucial to stay vigilant and continuously update security measures to be prepared for future challenges.

If you have any questions about implementing these security measures, feel free to contact my company [email protected].

Sources: This article is based on the SECURITY CONCERNS IN A NUTSHELL guide by the Federal Office for Information Security (BSI).

FAQs on Attack Vectors for AI Systems

What are the biggest threats to AI systems?

The biggest threats include evasion attacks, information extraction attacks, and poisoning and backdoor attacks, targeting the integrity, confidentiality, and availability of AI models.

How can you detect and prevent evasion attacks?

Evasion attacks can be detected and prevented through adversarial retraining, using diverse training data, and implementing redundant systems.

What role does employee training play in AI security?

Employee training is crucial to raising awareness of the risks of unauthorized AI tools and ensuring that all AI resources used in your company meet security standards.

How do firewalls and security protocols protect AI systems?

Firewalls and security protocols serve as the first line of defense against unauthorized access and cyberattacks. They filter traffic and protect network interfaces, minimizing the risk of intrusion into the AI system.

What is adversarial retraining and how does it work?

Adversarial retraining is a process where manipulated input data is intentionally included in the training set, so the model learns to recognize and correctly classify these manipulations, increasing the model’s robustness against evasion attacks.

What measures can you take against model-stealing attacks?

A simple measure against model-stealing is to reduce the precision and granularity of the model’s responses and to strictly control access to models to make systematic querying more difficult.

What does differential privacy mean in the context of AI security?

Differential privacy is a technique where noise is deliberately added to the data or the model’s outputs to prevent individual data points from being reconstructed or identified, which is particularly effective against model inversion attacks.

How can you ensure that your training data is not manipulated?

You should rely on trusted sources for your training data, perform regular integrity checks, and employ data verification mechanisms to ensure the data is authentic and unaltered.

What steps are necessary to develop a holistic security strategy for AI systems?

A holistic security strategy includes technical protection measures such as firewalls and adversarial training, organizational measures like employee training and clear communication channels, as well as continuous updates and monitoring of security measures to address new threats effectively.